A UK-registered technology company with British directors is behind a global platform used by neo-Nazis to upload footage of racist killings.

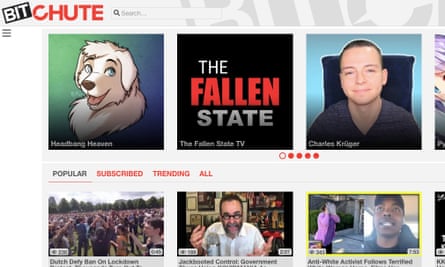

BitChute, which was used in the dissemination of far-right propaganda during the protests in London and elsewhere this month, has hosted films of terror attacks and thousands of antisemitic videos which have been viewed over three million times.

The platform has also hosted several videos from the proscribed far-right terrorist group National Action, now taken down.

Concerns about BitChute have been flagged in a new report, Hate Fuel: The hidden online world fuelling far right terror, produced by the Community Security Trust (CST), a charity set up to combat antisemitism.

In response to the report, the company, based in Newbury, Berkshire, said in a tweet that it blocks “any such videos, including incitement to violence”.

But the platform was still showing the full footage of the 2019 Christchurch mosque shootings and an attack on a German synagogue until the Observer brought the videos to its attention.

The far-right activist Tommy Robinson has a channel on BitChute with more than 25,000 subscribers.

When far-right protesters recently descended on London and several other cities, BitChute’s comment facility carried numerous racist postings on Robinson’s channel, many of them making derogatory claims about George Floyd, whose death after being restrained by police in the US city of Minneapolis has sparked worldwide protests.

“Extremists know that they can post anything on BitChute and it won’t be removed by the platform unless they are forced to do so,” said Dave Rich, director of policy at the CST. “Some of the terrorist videos we found on BitChute had been on the site for over a year and had been watched tens of thousands of times.”

He added: “It’s no surprise, therefore, that the website is a cesspit of vile racist, antisemitic neo-Nazi videos and comments. This is why there need to be legal consequences for website hosts who refuse to take responsibility for moderating and blocking this content themselves.”

BitChute is one of several platforms alarming those who monitor the far right.

Another, Gab, created in 2016, has a dedicated network of British users called “Britfam” that has 4,000 members and which far-right extremists use to circulate racism, antisemitism and Holocaust denial.

Last week the chief executive of Gab, Andrew Torba, sent an email to users attacking what he alleged was the “anti-white, anti-Trump and anti-conservative bias” on more mainstream social media platforms.

Torba wrote: “If you are a conservative. If you are straight. If you are white. If you are a Christian: you should not be on Facebook, Twitter, or any other Big Tech product. Please understand: you are the enemy to these people. They hate you. They literally believe that you are a terrorist for wearing a Maga [Make America Great Again] hat. This isn’t hyperbole.”

CST analysis found that the messaging service Telegram hosts images and posts celebrating the British terrorists Thomas Mair and David Copeland, and other far-right terrorists.

Parler, which bills itself as an alternative to Twitter and describes itself as “a non-biased free-speech driven entity”, is another social media platform rapidly gaining popularity. Though not included in the CST report, it is increasingly synonymous with the alt-right. The political commentator Katie Hopkins moved to Parler after being permanently suspended from Twitter on 19 June for contravening its ban on “hateful content”.

Within a week Hopkins had accrued 215,000 followers among the platform’s 1.2 million users. Extremism experts caution that deplatforming such individuals is akin to “whack-a-mole”, because removing them from one platform often drives them to less regulated social media sites.

Frequently a person suspended from a major social media platform such as Facebook or Twitter gravitates to smaller platforms that tolerate more extreme content and offer a licence to spread more toxic narratives and use increasingly inflammatory language.

On Saturday Hopkins used Parler to call on the government to “stop the invasion” when referring to small boat crossings of migrants.

Many of these newer platforms promote conspiracy theories about Jews and other minorities, according to the CST. A familiar trope is the “great replacement” conspiracy theory, which alleges that Jewish organisations and synagogues are using mass immigration to undermine white western nations.

The CST analysis suggests that the filming and livestreaming of terrorist attacks has become an integral part of far-right planning, and successful perpetrators are celebrated and glorified via striking imagery and memes.

The authors of Hate Fuel say: “Our report shows how a global movement of violent neo-Nazis uses social media to incite hatred, celebrate murder and turn terrorists into heroes. Far-right terrorism poses an urgent and lethal threat to Jews and other minority communities, and it is fuelled by a vast amount of online incitement hosted on websites that don’t seem to care. We need a global effort by governments, law enforcement and the tech industry, similar to their previous response to Isis propaganda, to turn this around.”

Ray Vahey, founder and chief executive of BitChute, defended the platform.

“BitChute is a startup that’s growing fast in a market that has been monopolised by YouTube,” he said. “It’s very challenging, but we are having an impact and it’s vital to consumers that companies like ours bring much-needed competition to a market like this.

“One of the difficult challenges is moderation and this has been the case for Google as well. We continue to develop tools to ensure we can remove any content that does not meet our standards sooner.”

BitChute said it had been in contact with UK counter-terrorism police and other organisations, who it believed could help it “improve our processes”.

The platform said that while it shared the CST’s goal of reducing violence, deplatforming “the more extreme sides of the political spectrum” pushes “people to parts of the web where they could no longer experience a variety of viewpoints”.

When asked if he seriously defended his platform streaming videos of terrorist atrocities, Vahey did not respond.