José Luis Hernández, computer scientist: ‘It’s very easy to trick artificial intelligence into launching an attack’

The IT expert’s project was recently chosen by Spain’s BBVA Foundation as one of the recipients of its research support program

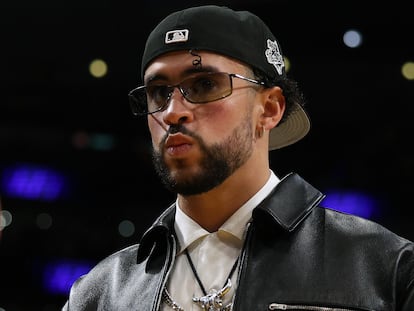

José Luis Hernández Ramos (Murcia, 37) is a Marie Skłodowska-Curie researcher at the University of Murcia, Spain, where he also received his bachelor’s, master’s and doctoral degrees in computer science. “When I was a kid and played with little machines like the Game Boy, I always wondered how it was possible for all those images to appear just from inserting a game cartridge,” he says. Over the course of his career, he has worked as a scientific officer for the European Commission, published more than 60 research papers and collaborated for five years with the European Union Agency for Cybersecurity (ENISA) and the European Cybersecurity Organization (ECSO), where he began to seek out work that would have a real-life impact. “Any researcher has to ask what artificial intelligence can do in their field,” he says. Now, Hernández’s “Gladiator” project, which aims to develop an artificial intelligence tool capable of detecting cybersecurity threats and analyzing malicious software, is one of 58 research initiatives selected for the BBVA Foundation’s Leonardo 2023 grant program.

Question. How would you summarize the goal of the Gladiator project?

Answer. The project seeks to use large language models, like ChatGPT, Bard, or Llama, to understand how these programs can help address cybersecurity problems. When we want to adapt one of these models to a specific domain, such as cybersecurity, we need to fit it to that discipline’s terminology. We want to understand how to adapt these models to detect an attack and, at the same time, feed them cybersecurity information to improve their performance and make them capable of solving problems of this type. The project will run until March 2025.

Q. How will the language models be adapted to the needs of the project?

A. You take information related to cybersecurity, such as databases containing threat information, and you train or fine-tune your model with it. That is how the model improves its understanding of what a cybersecurity threat is.

Q. How does artificial intelligence detect cybersecurity threats and how does it combat them?

A. Many artificial intelligence systems work by learning what is and what is not an attack, for example, by using data sets of network connections in a given environment. What we’re looking for with the project is to be able to analyze cybersecurity information that comes in text format, which may be related to vulnerabilities or found on social media and other sources, and then determine whether or not it is a threat.

Q. What is the difference between the systems used in the past to combat cybersecurity and those used today?

A. Security systems need to become increasingly intelligent, drawing on artificial intelligence techniques, to detect potential threats. In the past, these systems detected attacks by looking up known threats in databases. Now they need to evolve to be able to identify attacks they have never seen before.

Q. And what kinds of attacks could be prevented or identified?

A. The application of artificial intelligence techniques in cybersecurity will allow us to improve the identification and detection of a wide variety of attacks. A phishing attack is a clear example of how language models can help, by analyzing the text or content of an email. We can also identify whether multiple devices are colluding to launch an attack, that is, whether it is coming not from just one source but from several.

Q. And in the home context, how can artificial intelligence be used to combat attacks?

A. We now have 24-hour access to artificial intelligence through tools like ChatGPT, and that gives us the ability to promote cybersecurity education and awareness. Anyone can ask the tool how to protect themselves or how to configure a device to make it less vulnerable. It’s important to know that the models are not perfect; we still need to verify the results and the answers they provide.

Q. Would artificial intelligence help detect if an application has been tampered with?

A. Absolutely. It would help detect, for example, whether an application is fake or malicious. In fact, in this kind of analysis of applications, and of code and software in general, we are also seeing initiatives that use language models to analyze software code.

Q. Is artificial intelligence capable of detecting data theft or disinformation?

A. Yes, although attackers are getting more and more creative and using more sophisticated tools.

Q. Does artificial intelligence help both those who want to create disinformation and those who want to fight it?

A. Yes, it is a double-edged sword. In the wrong hands, it can be used to launch increasingly sophisticated attacks. Therein also lies the danger of the widespread access that people now have to artificial intelligence systems such as ChatGPT.

Q. How can a tool like ChatGPT be used to define or generate an attack?

A. When you ask a system like ChatGPT to generate an attack for you, the first thing it tells you is that it won’t, because doing so could lead to a cybersecurity problem. But it’s quite easy to trick the tool by telling it that you need to generate the attack in order to study it, because you want to make your system more robust, or because you want to teach the attack in a class. In those cases, the system will give you the answer.

Q. Will the system developed in the project make it possible to design a tool without sharing sensitive data?

A. This research project aims to understand the problems and limitations involved, so that the language model can be designed in a decentralized way. Right now, a model is trained and tuned with various sources of information, such as what I give it myself when I interact with the system. The idea is to decentralize this process so that, instead of sharing sensitive information, only related information is shared, without having to send details of the specific vulnerability for the system to identify an attack.

Q. What is the goal you hope to achieve with your project when you complete it in 2025?

A. To improve our understanding of how language models can help address cybersecurity problems; to create a system that helps us identify an attack and understand how and why it occurred; and to look for relationships between different attacks that can help predict whether my specific system will be attacked. We also want to know how artificial intelligence can generate measures that address and resolve the attack, for example, by automatically applying a security patch.

Sign up for our weekly newsletter to get more English-language news coverage from EL PAÍS USA Edition